Latest Blog Posts

Mostly Dead is Slightly Alive: Killing Zombie Sessions

Posted by Lætitia AVROT on 2026-03-04 at 00:00

pg_semantic_cache in Production: Tags, Eviction, Monitoring, and Python Integration

Posted by Muhammad Aqeel in pgEdge on 2026-03-03 at 04:20

Part 2 of the Semantic Caching in PostgreSQL series that’ll take you from a working demo to a production-ready system.

From Demo to Production

In Part 1, we set up pg_semantic_cache in a Docker container and demonstrated how semantic similarity matching works. In summary, semantic caching associates a string with each query that allows us to search the cache by meaning instead of by the exact query text. We demonstrated a cache hit at 99.9% similarity and a cache miss at 68% (configurable defaults), and discussed why this can make a difference for LLM-powered applications.Now let's make it production-ready. A cache that can store and retrieve is useful, but a cache you can organize, monitor, evict, and integrate into your application is what you actually need for a production deployment. In this post, we'll cover all of that.We'll continue using the same Docker environment from Part 1. If you need to set it up again, refer to the Dockerfile and setup instructions in that post.Organizing with Tags

Tags let you group and manage cache entries by category; the at the end of the cache entry contains the tags associated with a specific query. For example, tags in our first query:Identify the query as being associated with and .In our second query, the tags identify the query as being associated with and :We can view the tags with the following statement:When the underlying data changes in our backing database, we can then use the tags to Invalidate old content in our data set:Eviction Strategies

Caches need boundaries. You can use those boundaries to keep data sets fresh:pg_semantic_cache provides eviction strategies you can use to set those boundaries for your cache:For easy maintenance in a production environment, you can schedule automatic cache clean up with pg_cron:Monitoring

The extension provides built-in views for observability. The semantic_cache.cache_health view provides an overview of the number of entries and use of a given cache:The semantic_cache.recent_cache_activity view provide[...]INSERT ... ON CONFLICT ... DO SELECT: a new feature in PostgreSQL v19

Posted by Laurenz Albe in Cybertec on 2026-03-03 at 04:00

© Laurenz Albe 2026

PostgreSQL has supported the (non-standard) ON CONFLICT clause for the INSERT statement since version 9.5. In v19, commit 88327092ff added ON CONFLICT ... DO SELECT. A good opportunity to review the benefits of ON CONFLICT and to see how the new variant DO SELECT can be useful!

What is INSERT ... ON CONFLICT?

INSERT ... ON CONFLICT is the PostgreSQL implementation of something known as “upsert”: you want to insert data into a table, but if there is already a conflicting row in the table, you want to either leave the existing row alone or update update it instead. You can achieve the former by using “ON CONFLICT DO NOTHING”. To update the conflicting row, you use “ON CONFLICT ... DO UPDATE SET ...”. Note that with the latter syntax, you must specify a “conflict target”: either a constraint or a unique index, against which PostgreSQL tests the conflict.

You may wonder why PostgreSQL has special syntax for this upsert. After all, the SQL standard has a MERGE statement that seems to cover the same functionality. True, PostgreSQL didn't support MERGE until v15, but that's hardly enough reason to introduce new, non-standard syntax. The real reason is that “INSERT ... ON CONFLICT”, different from “MERGE”, does not have a race condition: even with concurrent data modification going on, “INSERT ... ON CONFLICT ... DO UPDATE” guarantees that either an INSERT or an UPDATE will happen. There cannot be a failure because — say — a concurrent transaction deleted a conflicting row between our attempt to insert and to update that row.

An example that shows the race condition with MATCH

Create a table as follows:

CREATE TABLE tab (key integer PRIMARY KEY, value integer);

Then start a transaction and insert a row:

BEGIN; INSERT INTO tab VALUES (1, 1);

In a concurrent session, run a MERGE statement:

MERGE INTO tab USING (SELECT 1 AS key, 2 AS value) AS source ON source.key = tab.key WHEN MATCHED THEN UPDATE SET value = source.value WHEN NOT MATCHED THEN INSERT VALUES (so[...]

Contributions for week 8, 2026

Posted by Cornelia Biacsics in postgres-contrib.org on 2026-03-02 at 14:20

Prague PostgreSQL Meetup met on Monday, February 23 for the February Edition - organized by Gulcin Yildirim Jelinek & Mayur B.

Speakers:

- Damir Bulic

- Mayur B.

- Radim Marek

- Josef Šimánek

On Wednesday, February 25 2026 Raphael Salguero & Borys Neselovskyi delivered a talk at DOAG DBTalk Database: Operating PostgreSQL with high availability

On Thursday, 26 February, the 1st PgGreece Meetup happened - it was organized by Charis Charalampidi.

Speakers:

- Charis Charalampidi

- Eftychia Kitsou

- Florents Tselai

- Panagiotis Stathopoulos

The POSETTE 2026 Call for Paper Committee met to finalize and published the schedule :

- Claire Giordano

- Daniel Gustafsson

- Krishnakumar “KK” Ravi

- Melanie Plageman

PGConf.de 2026 Call for Paper Committee met to finalize and publish the schedule:

- Christoph Berg

- Josef Machytka

- Olga Kramer

- Polina Bungina

- Priyanka Chatterjee

pgdsat version 2.0

Posted by Gilles Darold in HexaCluster on 2026-03-01 at 04:04

Developer U: Exercising Cohesion and Technical Skill in PostgreSQL

Posted by Floor Drees in EDB on 2026-02-27 at 17:04

Open Source, Open Nerves

Posted by Vibhor Kumar on 2026-02-27 at 10:22

Trust, Governance, Talent, and the Enterprise Reality of PostgreSQL

Last year at the CIO Summit Mumbai, I had the opportunity to participate in a leadership roundtable with CIOs across banking, fintech, telecom, manufacturing, and digital enterprises.

The session was not a product showcase.

It wasn’t a benchmarking debate.

It wasn’t even primarily about technology.

It was about risk.

Specifically, the evolving role of open source — and particularly PostgreSQL — inside mission-critical enterprise environments.

Over the past week, I revisited those conversations in a LinkedIn series titled “Open Source, Open Nerves.” This blog expands on that series, capturing the deeper strategic undercurrents that surfaced in that room — and why they matter even more today.

The Evolution of the Conversation

There was a time when open source debates revolved around performance and cost. That time has passed.

PostgreSQL has proven itself across:

- Core banking workloads

- Telecom billing engines

- Financial risk platforms

- Regulatory reporting systems

- AI-driven analytics platforms

- Hybrid and multi-cloud architectures

No one in the room questioned whether PostgreSQL could handle enterprise-grade workloads.

The real conversation had shifted.

From capability

to accountability.

1. Trust Is the Real Architecture

One recurring sentiment defined the tone of the discussion:

“Power is no longer the question. Trust is.”

CIOs are not evaluating features in isolation. They are evaluating consequences.

When PostgreSQL becomes the backbone of a regulated enterprise system, the stakes include:

- Revenue continuity

- Regulatory exposure

- Customer trust

- Brand reputation

- Executive accountability

Trust in this context has multiple dimensions:

Operational Trust

Will it stay up under stress?

Will failover behave as designed?

Will replication hold during peak load?

Security Trust

Is the

[...]How Patroni Brings High Availability to Postgres

Posted by Shaun Thomas in pgEdge on 2026-02-27 at 05:33

Let’s face it, there are a multitude of High Availability tools for managing Postgres clusters. This landscape evolved over a period of decades to reach its current state, and there’s a lot of confusion in the community as a result. Whether it’s Reddit, the Postgres mailing lists, Slack, Discord, IRC, conference talks, or any number of venues, one of the most frequent questions I encounter is: How do I make Postgres HA?My answer has been a steadfast “Just use Patroni,” since about 2017. Unless something miraculous happens in the Postgres ecosystem, that answer is very unlikely to change. But why? What makes Patroni the “final answer” when it comes to Postgres and high availability? It has a lot to do with how Patroni does its job, and that’s what we’ll be exploring in this article.

The elephant in the room

By itself, Postgres is not a cluster in the sense most people visualize. They may envision a sophisticated mass of interconnected servers, furiously blinking their lights at each other, aware of each computation the others make, ready to take over should one fail. In reality, the “official” use of the word “cluster” in the Postgres world is just one or more databases associated with a single Postgres instance. It’s right in the documentation for Creating a Database Cluster.“A database cluster is a collection of databases that is managed by a single instance of a running database server.”The concept of multiple such instances interacting is so alien to Postgres that it didn’t even exist until version 9.0 introduced Hot Standbys and streaming replication back in 2010. And how do hot standby instances work? The same way as the primary node: they apply WAL pages to the backend heap files. Those WAL pages may be supplied from archived WAL files or by streaming them from the primary itself, but it’s still just continuous crash recovery by another name.This matters because each Postgres node still knows little to nothing about other nodes in this makeshift cluster over 15 years later. This isn’t necessarily a p[...]PostgreSQL Statistics: Why queries run slow

Posted by Radim Marek on 2026-02-26 at 23:01

Every query starts with a plan. Every slow query probably starts with a bad one. And more often than not, the statistics are to blame. But how does it really work? PostgreSQL doesn't run the query to find out — it estimates the cost. It reads pre-computed data from pg_class and pg_statistic and does the maths to figure out the cheapest path to your data.

In ideal scenario, the numbers read are accurate, and you get the plan you expect. But when they are stale, the situation gets out of control. Planner estimates 500 rows, plans a nested loop, and hits 25,000. What seemed as optimal plan turns into a cascading failure.

How do statistics get stale? It can be either bulk load, a schema migration, faster-than-expected growth, or simply VACUUM not keeping up. Whatever the cause, the result is the same. The planner is flying blind. Choosing paths based on reality that no longer exists.

In this post we will go inside the two catalogs the planner depends on, understand what ANALYZE actually gets for you from a 30,000-row table, and see how those numbers determine whether your query takes milliseconds or minutes.

Sample schema

For demonstration purposes we will use the same schema as in the article Reading Buffer statistics in EXPLAIN output.

CREATE TABLE customers (

id integer GENERATED ALWAYS AS IDENTITY PRIMARY KEY,

name text NOT NULL

);

CREATE TABLE orders (

id integer GENERATED ALWAYS AS IDENTITY PRIMARY KEY,

customer_id integer NOT NULL REFERENCES customers(id),

amount numeric(10,2) NOT NULL,

status text NOT NULL DEFAULT 'pending',

note text,

created_at date NOT NULL DEFAULT CURRENT_DATE

);

INSERT INTO customers (name)

SELECT 'Customer ' || i

FROM generate_series(1, 2000) AS i;

INSERT INTO orders (customer_id, amount, status, note, created_at)

SELECT

(random() * 1999 + 1)::int,

(random() * 500 + 5)::numeric(10,2),

(ARRAY['pending','shipped','delivered','cancelled'])[floor(random()*4+1)::int],

CASE WHEN random() < 0.3 THEN 'Some note text hereA reponsible role for AI in Open Source projects?

Posted by Alastair Turner in Percona on 2026-02-26 at 14:00

AI-driven pressure on open source maintainers, reviewers and, even, contributors, has been very much in the news lately. Nobody needs another set of edited highlights on the theme from me. For a Postgres-specific view, and insight on how low quality AI outputs affect contributors, Tomas Vondra published a great post on his blog recently, which referenced an interesting talk by Robert Haas at PGConf.dev in Montreal last year. I won’t rehash the content here, they’re both quite quick reads and well worth the time.

The real cost of random I/O

Posted by Tomas Vondra on 2026-02-26 at 13:00

The random_page_cost was introduced ~25 years ago, and since the very beginning it’s set to 4.0 by default. The storage changed a lot since then, and so did the Postgres code. It’s likely the default does not quite match the reality. But what value should you use instead? Flash storage is much better at handling random I/O, so maybe you should reduce the default? Some places go as far as recommending setting it to 1.0, same as seq_page_cost. Is this intuition right?

Postgres JSONB Columns and TOAST: A Performance Guide

Posted by Paul Ramsey in Crunchy Data on 2026-02-25 at 15:05

Postgres has a big range of user-facing features that work across many different use cases — with complex abstraction under the hood.

Working with APIs and arrays in the jsonb type has become increasingly popular recently, and storing pieces of application data using jsonb has become a common design pattern.

But why shred a JSON object into rows and columns and then rehydrate it later to send it back to the client?

The answer is efficiency. Postgres is most efficient when working with rows and columns, and hiding data structure inside JSON makes it difficult for the engine to go as fast as it might.

JSONB in Postgres

How does JSON work in a database like Postgres that is optimized for rows and columns?

Like the text, bytea and geometry types, the jsonb type is "variable length" — there is no limit to how big it can be.

Under the covers, the PostgreSQL database stores all data in fixed-size 8-KB pages. But how can a data type with no size limit, like jsonb, exist in a database with such a small fixed storage size limit?

It does this by using The Oversize Attribute Storage Technique, aka "TOAST."

Ordinarily, all the attributes in a row can fit inside a page.

But sometimes, one or more of the attributes are too big to fit.

Under the covers, PostgreSQL quietly cuts up the big attributes, puts them in a side table and replaces them in the original page with a unique identifier.

So even for large attributes, the user does not have to do anything special to store them. The database abstraction remains intact. Or does it?

JSONB column types are convenient, but are they fast?

We are going to test JSONB performance for differently sized documents. The function generate_item_json will generate a jsonb object of arbitrary size.

The item_description can be expanded to make the object too big to fit on a page. This example generates a JSON object with a 40-byte description.

Here we create a 10,000-row table with four columns, a key, a name and price, and the o

[...]February Meetup: slides and recording are available!

Posted by Henrietta Dombrovskaya on 2026-02-25 at 11:30

Thank you, Shaun, for presenting, and huge thanks to all participants for an engaging and productive discussion!

As always, I am glad that people from all over the world can join us virtually, but if you are local, consider coming next time! We have pizza, and you can’t consume it virtually!

Semantic Caching in PostgreSQL: A Hands-On Guide to pg_semantic_cache

Posted by Muhammad Aqeel in pgEdge on 2026-02-25 at 06:03

Your LLM application is probably answering the same question dozens of times a day. It just doesn't realize it because the words are different each time.

The Problem with Exact-Match Caching

If you're running an AI-powered application like a chatbot, a RAG pipeline, an analytics assistant, or others, you've likely added a cache to cut down on expensive LLM calls. Most caches work by matching the exact query string. Same string, cache hit. Different string, cache miss.The trouble is that humans don't repeat themselves verbatim. These three queries all want the same answer:A traditional cache sees three unique strings and triggers three separate LLM calls. In production AI applications, research shows that 40-70% of all queries are semantic duplicates: different words, same intent. That translates directly into wasted API calls, wasted latency, and a bloated cloud bill.Semantic caching fixes this by matching on meaning instead of text. It uses vector embeddings to recognize that "Q4 revenue" and "last quarter's sales" are asking for the same thing, and serves the cached result in milliseconds instead of making another round trip to the LLM.pg_semantic_cache is a PostgreSQL extension that brings this capability directly into your database. In this post, we'll set it up from scratch in a Docker container using pgEdge Enterprise Postgres 17 and walk through working examples you can run yourself.What You'll Build

By the end of this post, you'll have:- A Docker container running pgEdge Enterprise Postgres 17 with pgvector and pg_semantic_cache.

- A working semantic cache that matches queries by meaning.

- Hands-on experience with caching and retrieval.

- A clear understanding of how semantic similarity matching works in practice.

Setting Up the Environment

For our example, we'll use a Rocky Linux 9 container with pgEdge Enterprise Postgres 17, which bundles pgvector out of the box.Dockerfile

First, we create a file called Dockerfile that defines the content of our container:Bui

[...]PGConf.DE 2026 - The schedule for PGConf.DE 2026 is now live!

Posted by Daniel Westermann in PostgreSQL Europe on 2026-02-24 at 16:45

Discover the exciting lineup of PostgreSQL speakers and exciting topics that await you on the schedule for this year.

See you in Essen in April

Create your PostgreSQL clusters with the "builtin" C collation!

Posted by Laurenz Albe in Cybertec on 2026-02-24 at 05:18

A while ago, I wrote about the index corruption that you can get after an operating system upgrade, and recently I detailed how to keep the pain of having to rebuild indexes minimal. Since this is an embarrassing problem that keeps resurfacing, here is my recommendation on how to avoid the problem entirely by using the C collation.

Management summary of PostgreSQL's collation problem

For those who cannot be bothered to follow the links above: PostgreSQL by default uses the locale support provided by external libraries, either the C library or the ICU library. One aspect of locale is the collation, the rules to compare and sort strings. Upgrading the operating system will upgrade the C and ICU libraries. Sometimes such upgrades will change the collation rules. As a consequence, indexes on string expressions may suddenly end up sorted in the wrong way, which means index corruption.

To deal with the problem, you have to rebuild affected indexes after an operating system upgrade.

Why does PostgreSQL have this problem, when other databases don't?

There are two reasons:

- Many other databases implement their own collations, so they can avoid breaking changes. When commit 5b1311acfb introduced locale support in 1997, it simply saved development effort by using an existing implementation. In retrospect, this may not have been the best decision.

- Many other databases use the C collation by default, which avoids the problem altogether. PostgreSQL takes the default value for the locale from the environment of the shell where you run

initdbto create the cluster. As a consequence, you often end up using a natural language collation with PostgreSQL.

Why are natural language collations subject to changes, while the C collation is stable?

The pleasant simplicity of the C collation

The C collation is very simple: strings are compared byte by byte, and the numerical value determines the order. That means that a simple call to memcmp() can determine the sorting order of two strings

Row Locks With Joins Can Produce Surprising Results in PostgreSQL

Posted by Haki Benita on 2026-02-23 at 22:00

Here's a database riddle: you have two tables with data connected by a foreign key. The foreign key field is set as not null and the constraint is valid and enforced. You execute a query that joins these two tables and you get no results! How is that possible? We thought it wasn't possible, but a recent incident revealed an edge case we never thought about.

In this article I show how under some circumstances row locks with joins can produce surprising results, and suggest ways to prevent it.

Image by abstrakt design

The Problem

Imagine you work in the DMV and you are in charge of managing car ownership. You have two tables:

db=# CREATE TABLE owner (

id int PRIMARY KEY,

name text NOT NULL

);

CREATE TABLE

db=# CREATE TABLE car (

id int PRIMARY KEY,

owner_id int NOT NULL,

CONSTRAINT car_owner_id_fk FOREIGN KEY (owner_id) REFERENCES owner(id)

);

CREATE TABLE

Add a car and some potential owners:

db=# INSERT INTO owner (id, name) VALUES

(1, 'haki'),

(2, 'jerry'),

(3, 'george')

RETURNING *;

id │ name

────┼───────

1 │ haki

2 │ jerry

3 | george

(3 rows)

INSERT 0 2

db=# INSERT INTO car (id, owner_id) VALUES(1, 1) RETURNING *;

id │ owner_id

────┼──────────

1 │ 1

(1 row)

INSERT 0 1

You have three owners - "haki", "jerry" and "george", and a single car with id 1 which is currently owned by "haki".

Changing Owner

[...]Contributions for week 7, 2026

Posted by Cornelia Biacsics in postgres-contrib.org on 2026-02-23 at 08:48

New podcast episode “Why it's fun to hack on Postgres performance“ with Tomas Vondra published on February 20 2026 by Claire Giordano and Aaron Wislang from the series “Talking Postgres”.

Hyderabad PostgreSQL User Group met on February 20, organized by Hari Kiran, Ameen Abbas and Rajesh Madiwale.

Speaker:

- Ameen Abbas

- Soqra Banu(sughra) Rumi

- Ashoka Reddy Tatiparthi

- Vikas Gupta

The programme committee for PGConf.dev 2026 finalized a part of the conference schedule for Wednesday, Thursday and Friday.

- Melanie Plageman (Microsoft)

- Dilip Kumar (Google)

- Jonathan Katz (Databricks)

- Paul Ramsey (Snowflake)

- Jacob Champion (EDB) (Tuesday sessions will follow).

PGConf.de 2026 talk selection committee met to finalize the session list:

- Christoph Berg

- Josef Machytka

- Olga Kramer

- Polina Bungina

- Priyanka Chatterjee

Openclaw is Spam, Like Any Other Automated Email

Posted by Jeremy Schneider on 2026-02-23 at 01:23

Open Source communities are trying to quickly adapt to the present rapid advances in technology. I would like to propose some clarity around something that should be common sense.

Automated emails are spam. They always have been. Openclaw (and whatever new thing surfaces this summer) is no different.

Policies saying automated emails/messages are banned – including anything AI generated – are not only common-sense policies, they aren’t even a change from how we’ve always worked. This includes automated comments on github issues, automated PRs, automated patch submissions, and even any kind of automated review. Copilot automated reviews, snyk, etc – are ok if and only if it’s configured by the owners of the repo/project. Common sense.

Enforcement of these policies – more than ever – depends on trust and relationships. I do think, for example, that non-native-english-speakers should be allowed to use AI to help them check their english. Used responsibly, AI tools can help a lot with language learning! Your grammar checker is probably using some kind of LLM anyway. But I’m saying that a human always presses the “send” button on the message, and this human is responsible for the words they sent. If moderators suspect automated messages, every open source project should have a policy they can cite for blocking/banning the account.

Tomas Vondra’s article “the AI inversion” is the latest of many good and thought-provoking pieces I’ve read – it’s well worth the read – although he’s getting at deeper problems than what I’m writing about here – and he has very good reasons to have a much deeper level of concern for the impact of AI tooling on open source communities. These are interesting times and we don’t have all the answers yet.

A few more things I’ve recently read, which I think are good:

- CloudNativePG AI policy – https://github.com/cloudnative-pg/governance/blob/main/AI_POLICY.md

- Linux Foundation AI Policy – https://www.linuxfoundation.org/legal/generative-ai

- Oxide RFD – https://rf

What Does INSERT 0 1 Actually Tell You?

Posted by Lætitia AVROT on 2026-02-23 at 00:00

Upgrading PostgreSQL in place on FreeBSD

Posted by Dan Langille on 2026-02-22 at 16:11

I’ve updated one of my PostgreSQL instances to PostgreSQL 18, it’s time to update the others. This time, I’m going to try pg_update. My usual approach is pg_dump and pg_restore.

As this is my first attempt doing this, I’m posting this mostly for future reference when I try this again. There will be another blog post when I try this again. Which should be soon. This paragraph will link to that post when it is available.

In this post:

- FreeBSD 15.0

- PostgreSQL 16.12 (pg03)

- PostgreSQL 18.2 (pg02)

The names in (brackets) are the names of the jail in question.

If you’re upgrading in place, and not copying data around like me, skip down until you see Saving the old binaries.

I’m reading http://www.unibia.com/unibianet/freebsd/upgrading-between-major-versions-postgresql-freebsd and thinking this might work well for me.

The overview of upgrade-in-place

The PostgreSQL upgrade-in-place needs these main parts:

- The old binaries (e.g. postgresql16-server-16.12.pkg)

- The new binaries (postgresql18-server-18.2.pkg)

- The old data (/var/db/postgres/data16)

Keep that in mind as I go through this. We can’t install both packages at once, so we’ll untar the old package into a safe location.

How you get that package: up to you. Try /var/cache/pkg, or the FreeBSD package servers, or (while you still have the old package), run pkg create postgresql16-server (for example).

My data

Ignore this section if you have the data. For me, I’m testing this process, and I’m documenting this part here.

This is how the data is laid out. My idea: snapshot line 7 and use it in line 12.

[18:23 r730-01 dvl ~] % zfs list | grep pg data02/jails/pg01 34.9G 175G 10.8G /jails/pg01 data02/jails/pg02 12.7G 175G 11.6G /jails/pg02 data02/jails/pg03 11.5G 175G 10.8G /jails/pg03 data03/pg01[...]

Is Oracle-Compatible PostgreSQL Actually Useful?

Posted by Ruohang Feng on 2026-02-22 at 00:00

Waiting for PostgreSQL 19 – Allow log_min_messages to be set per process type

Posted by Hubert 'depesz' Lubaczewski on 2026-02-21 at 19:05

Waiting for PostgreSQL 19 – psql: Add %i prompt escape to indicate hot standby status.

Posted by Hubert 'depesz' Lubaczewski on 2026-02-21 at 14:51

Per-worker, and global, IO bandwidth in explain plans

Posted by Hubert 'depesz' Lubaczewski on 2026-02-21 at 13:24

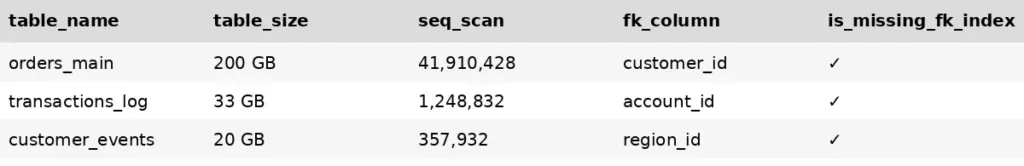

Fixing ORM Slowness by 80% with Strategic PostgreSQL Indexing

Posted by Hamza Sajawal in Stormatics on 2026-02-20 at 11:41

The AI inversion

Posted by Tomas Vondra on 2026-02-20 at 10:00

If you attended FOSDEM 2026, you probably noticed discussions on how AI impacts FOSS, mostly in detrimental ways. Two of the three keynotes in Janson mentioned this, and I assume other speakers mentioned the topic too. Moreover, it was a very popular topic in the “hallway track.” I myself chatted about it with multiple people, both from the Postgres community and outside of it. And the experience does not seem great …

Inside PostgreSQL's 8KB Page

Posted by Radim Marek on 2026-02-19 at 21:45

If you read previous post about buffers, you already know PostgreSQL might not necessarily care about your rows. You might be inserting a user profile, or retrieving payment details, but all that Postgres works with are blocks of data. 8KB blocks, to be precise. You want to retrieve one tiny row? PostgreSQL hauls an entire 8,192-byte page off the disk just to give it to you. You update a single boolean flag? Same thing. The 8KB page is THE atomic unit of I/O.

But knowing those pages exist isn't enough. To understand why the database behaves the way it does, you need to understand how it works. Every time you execute INSERT, PostgreSQL needs to figure out how to fit it into one of those 8,192-byte pages.

The buffer pool caches them, Write-Ahead Log (WAL) protects them, and VACUUM cleans them. The deep dive into the PostgreSQL storage internals starts by understanding what happens inside those 8KB pages. Pages that are used by PostgreSQL to organize all data - tables, indexes, sequences, TOAST relations.

The 8KB

DB_BLOCK_SIZE), though tablespaces with non-standard block sizes can be created separately.

The 8KB page size can be traced down to original Berkley POSTGRES project created in mid-1980s. In those times Unix systems typically used 4KB or 8KB virtual memory pages, and disk sectors were 512 bytes. Choosing 8KB meant a single database page mapped cleanly to OS memory pages and aligned well with filesystem I/O.

And the math still works today. Modern Linux kernels manage memory in 4KB virtual memory pages. SSDs now use 4KB physical sectors instead of 512 bytes. The default filesystem block size on ext4 and XFS is 4KB.

[...]Building Ask Ellie: A RAG Chatbot Powered by pgEdge

Posted by Dave Page in pgEdge on 2026-02-19 at 07:59

If you've visited the pgEdge documentation site recently, you may have noticed a small elephant icon in the bottom right corner of the page. That's Ask Ellie; our AI-powered documentation assistant, built to help users find answers to their questions about pgEdge products quickly and naturally. Rather than scrolling through pages of documentation, you can simply ask Ellie a question and get a contextual, accurate response drawn directly from our docs.What makes Ellie particularly interesting from an engineering perspective is that she's built on PostgreSQL and pgEdge's ecosystem of extensions and tools, and she serves as both a useful tool for our users and a real-world demonstration of what you can build on top of PostgreSQL when you pair it with the right components. In this post, I'll walk through how we built her and the technologies that power the system.

The Architecture at a Glance

At its core, Ask Ellie is a Retrieval Augmented Generation (RAG) chatbot. For those unfamiliar with the pattern, RAG combines a traditional search step with a large language model to produce answers that are grounded in actual source material, rather than relying solely on the LLM's training data. This is crucial for a documentation assistant, because we need Ellie to give accurate, up-to-date answers based on what's actually in our docs, not what the model happens to remember from its training set.The architecture breaks down into several layers:- Content ingestion

- : crawling and loading documentation into PostgreSQL

- Embedding and chunking

- : automatically splitting content into searchable chunks and generating vector embeddings

- Retrieval and generation

- : finding relevant chunks for a user's query and generating a natural language response

- Frontend

- : a chat widget embedded in the documentation site that streams responses back to the user

Loading the Documentation

The first challenge with any RAG system is getting your content into a form[...]Hacking Workshop for March 2026

Posted by Robert Haas in EDB on 2026-02-19 at 02:43

For next month's hacking workshop, I'm scheduling 2 or 3 discussions of Tomas Vondra's talk, Performance Archaeology, given at 2024.PGConf.EU. If you're interested in joining us, please sign up using this form and I will send you an invite to one of the sessions. Thanks as always to Tomas for agreeing to attend the sessions.

Read more »Top posters

Number of posts in the past two months

Hubert 'depesz' Lubaczewski - 8

Hubert 'depesz' Lubaczewski - 8 Zhang Chen - 7

Zhang Chen - 7 Lætitia AVROT - 7

Lætitia AVROT - 7 Jimmy Angelakos - 5

Jimmy Angelakos - 5 Cornelia Biacsics (postgres-contrib.org) - 5

Cornelia Biacsics (postgres-contrib.org) - 5 Dave Page (pgEdge) - 5

Dave Page (pgEdge) - 5 Radim Marek - 5

Radim Marek - 5 Ian Barwick - 4

Ian Barwick - 4 Henrietta Dombrovskaya - 4

Henrietta Dombrovskaya - 4 Jeremy Schneider - 4

Jeremy Schneider - 4

Top teams

Number of posts in the past two months

- pgEdge - 14

- HexaCluster - 9

- EDB - 7

- Cybertec - 7

- Stormatics - 5

- postgres-contrib.org - 5

- Percona - 5

- Dalibo - 2

- PostgreSQL Europe - 2

- Xata - 2

Feeds

Planet

- Policy for being listed on Planet PostgreSQL.

- Add your blog to Planet PostgreSQL.

- List of all subscribed blogs.

- Manage your registration.

Contact

Get in touch with the Planet PostgreSQL administrators at planet at postgresql.org.